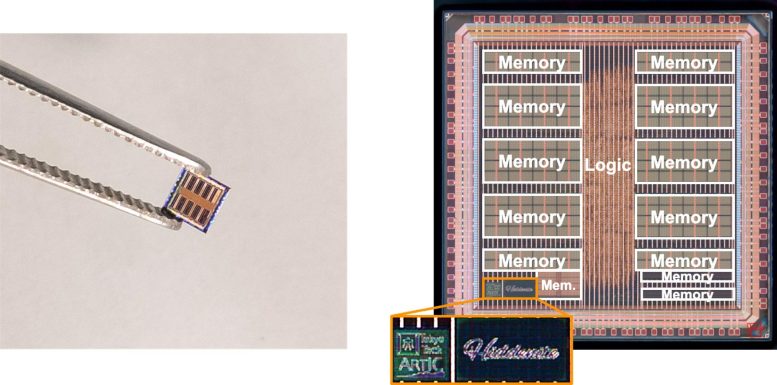

Researchers at the Tokyo Institute of Technology (Tokyo Tech) have developed Hiddenite, an accelerator chip that lowers the computational burden of calculating sparse ‘hidden neural networks.’ The chip drastically reduces the use of external memory while improving overall computational efficiency.

Deep neural networks (DNNs) are a class of machine learning models that rely on a large number of parameters to learn and make predictions. However, DNNs can be ‘pruned’: removing parameters shrinks the model and reduces its computational burden.
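To illustrate what pruning means in practice, here is a minimal Python sketch of magnitude pruning, a common technique that zeroes out the weights with the smallest absolute values. It is a generic example, not the method used in the Hiddenite work, and the function name `magnitude_prune` is hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude fraction set to zero.

    `sparsity` is the fraction of weights to remove (0.0 keeps everything).
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


print(magnitude_prune([0.5, -0.1, 0.03, -0.8], 0.5))  # [0.5, 0.0, 0.0, -0.8]
```

Real frameworks prune whole tensors (often whole channels) and usually fine-tune afterward; a flat list simply keeps the idea visible.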

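One notable idea for mitigating these costs is the ‘lottery ticket hypothesis.’ According to the hypothesis, a randomly initialized DNN contains subnetworks that, when trained, can reach the same accuracy as the original DNN. Hidden neural networks (HNNs) go a step further: they find accurate subnetworks inside a randomly initialized network without ever training its weights, using a binary ‘supermask’ to select which weights are kept.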
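The following sketch shows how a supermask carves a subnetwork out of fixed random weights. It assumes the per-weight scores have already been learned and that the mask keeps the top-scoring fraction of weights, which is one common selection rule in HNN research; the names `top_k_supermask` and `hidden_neuron` are hypothetical.

```python
import random


def top_k_supermask(scores, keep_fraction):
    """Return a 0/1 mask keeping the `keep_fraction` of positions with the highest scores."""
    k = int(len(scores) * keep_fraction)
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    keep = set(ranked[:k])
    return [1 if i in keep else 0 for i in range(len(scores))]


def hidden_neuron(inputs, weights, mask):
    """Weighted sum of one neuron in which only mask-selected weights contribute."""
    return sum(x * w * m for x, w, m in zip(inputs, weights, mask))


rng = random.Random(0)
weights = [rng.uniform(-1.0, 1.0) for _ in range(6)]  # random and never trained
scores = [rng.random() for _ in range(6)]             # stand-in for learned scores
mask = top_k_supermask(scores, 0.5)                   # keep the top 50% of weights
inputs = [1.0, 0.5, -0.5, 2.0, 0.0, 1.5]
print(mask, hidden_neuron(inputs, weights, mask))
```

Note that `weights` is never modified: in this style of training only the scores change, and the mask is recomputed from them.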

However, conventional DNN accelerators tend to buy higher performance at the cost of higher power consumption. This motivated a team at the Tokyo Institute of Technology, headed by Professor Masato Motomura and Jaehoon Yu, to develop ‘Hiddenite,’ an AI processor designed specifically to compute hidden neural networks with significantly lower power consumption.

More on the Hiddenite AI Processor

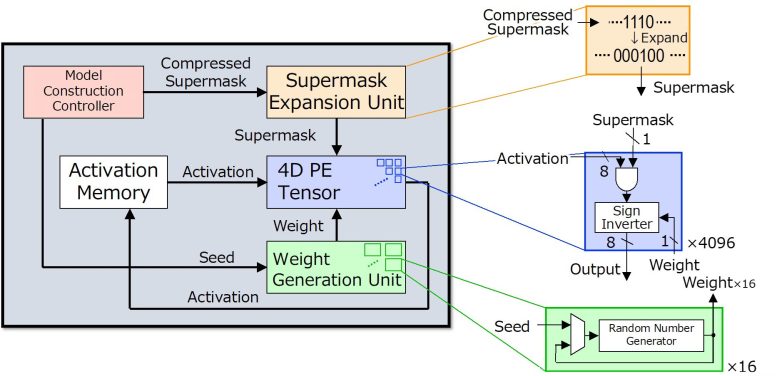

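Hiddenite is short for ‘Hidden Neural Network Inference Tensor Engine,’ and it is the first HNN inference chip to be developed. Its architecture significantly reduces external memory access while achieving higher energy efficiency, in part through on-chip weight generation: because an HNN’s weights are never trained, the chip can regenerate them with a random number generator instead of fetching them from external memory.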
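Because an HNN’s weights are random and never updated, a seed is enough to reproduce them. The sketch assumes a 32-bit xorshift generator (a cheap, hardware-friendly choice) purely for illustration; the generator Hiddenite actually uses is not described here, and the names `xorshift32` and `generate_weights` are hypothetical.

```python
MASK32 = 0xFFFFFFFF


def xorshift32(state: int) -> int:
    """One step of Marsaglia's 32-bit xorshift generator; state must be nonzero."""
    state ^= (state << 13) & MASK32
    state ^= state >> 17
    state ^= (state << 5) & MASK32
    return state


def generate_weights(seed: int, count: int, scale: float = 1.0) -> list[float]:
    """Deterministically regenerate `count` weights in (-scale, scale) from `seed`."""
    state = seed & MASK32
    if state == 0:
        raise ValueError("xorshift32 needs a nonzero 32-bit seed")
    weights = []
    for _ in range(count):
        state = xorshift32(state)
        # Map the nonzero 32-bit state into (0, 2), then shift into (-1, 1) and scale.
        weights.append(scale * (2.0 * state / 2**32 - 1.0))
    return weights


print(generate_weights(42, 4))
```

Calling `generate_weights(42, 8)` twice returns identical lists, so in this sketch only the seed, not the weights themselves, would need to be stored.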

In addition, ‘on-chip supermask expansion’ reduces the number of supermasks the accelerator has to load. Finally, a high-density four-dimensional (4D) parallel processor maximizes data reuse, making the chip more efficient.

According to Professor Motomura, these benefits give Hiddenite an edge over existing DNN inference accelerators. The team also introduced a new training method called ‘score distillation’: because hidden neural networks never update their weights, the knowledge that conventional knowledge distillation would transfer into the weights is distilled into the scores (the per-weight values that determine the supermask) instead.
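Score distillation builds on standard knowledge distillation, in which a ‘student’ model is trained to match a ‘teacher’ model’s softened outputs. The sketch computes only that standard distillation loss (a temperature-scaled KL divergence); how Tokyo Tech routes this loss into the scores is not detailed here, so the comment about score updates is an assumption, and the function names are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened outputs, scaled by T^2 as is customary.

    Assumption: in score distillation, the gradient of this loss would update the
    HNN's supermask scores, since its weights stay fixed.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(p_teacher, p_student) if p > 0)
    return kl * temperature ** 2


teacher = [2.0, 1.0, 0.1]  # logits from a conventionally trained network
student = [1.5, 1.2, 0.3]  # logits from the hidden network
print(distillation_loss(student, teacher))
```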

The Bottom Line

Hiddenite marks a milestone in AI processing. By demonstrating these efficiency gains on a real silicon chip, it could significantly change the world of artificial intelligence and machine learning.

After all, the ultimate goal is to create faster, more efficient, and more environmentally friendly computing chips. So, let us know in the comments: what are your thoughts on the development of Hiddenite?